behind the scenes

information on the instance

As this is a community project, I thought it would be a good idea to share some information about what runs behind your browser or app. I will update this information from time to time. Let me know if you have more insight into a particular subject.

The US elections in 2024 made it clear that privacy will be even more important than it already was. An assessment was made, and all services for mountains.social that were hosted on US platforms were migrated to the EU, where the GDPR (General Data Protection Regulation) is in effect. This will also be the strategy moving forward. An exception might be services in Switzerland, where the HQ of mountains.social is also located. The nFADP (New Swiss Data Protection Act) is, however, very comparable to the GDPR.

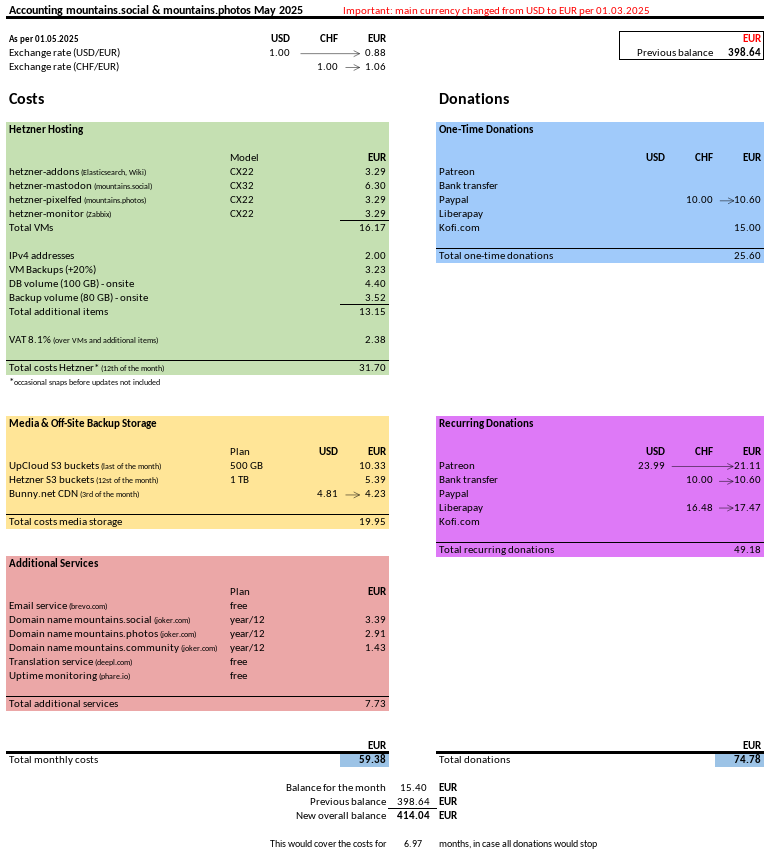

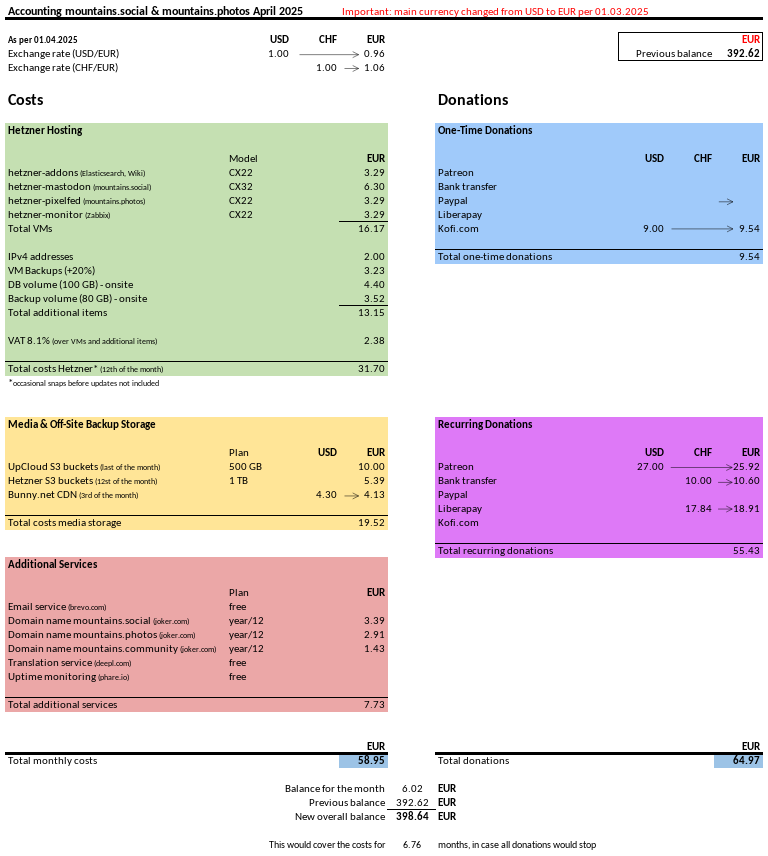

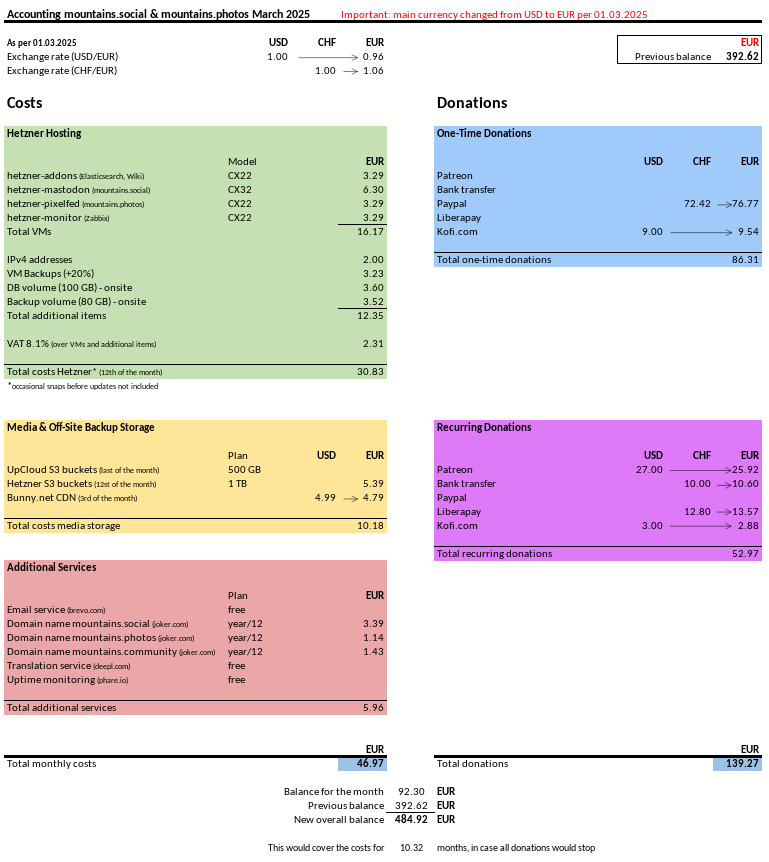

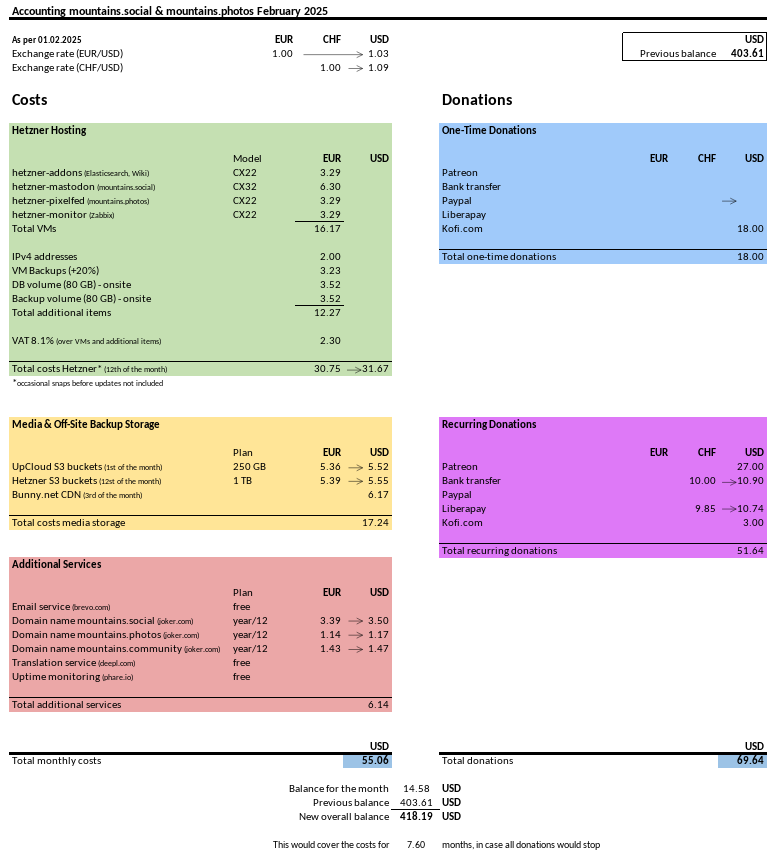

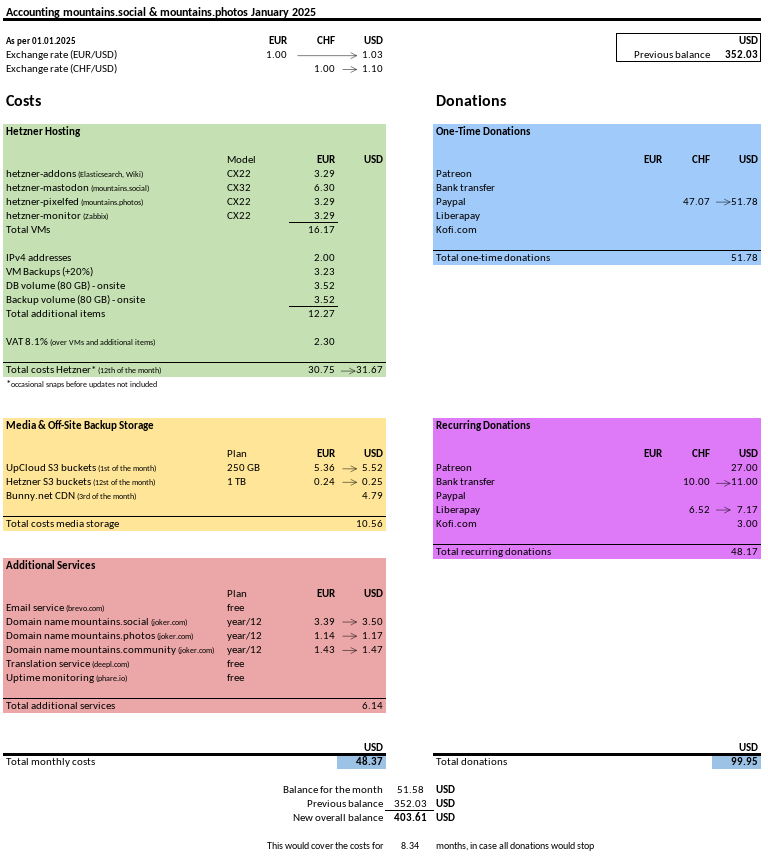

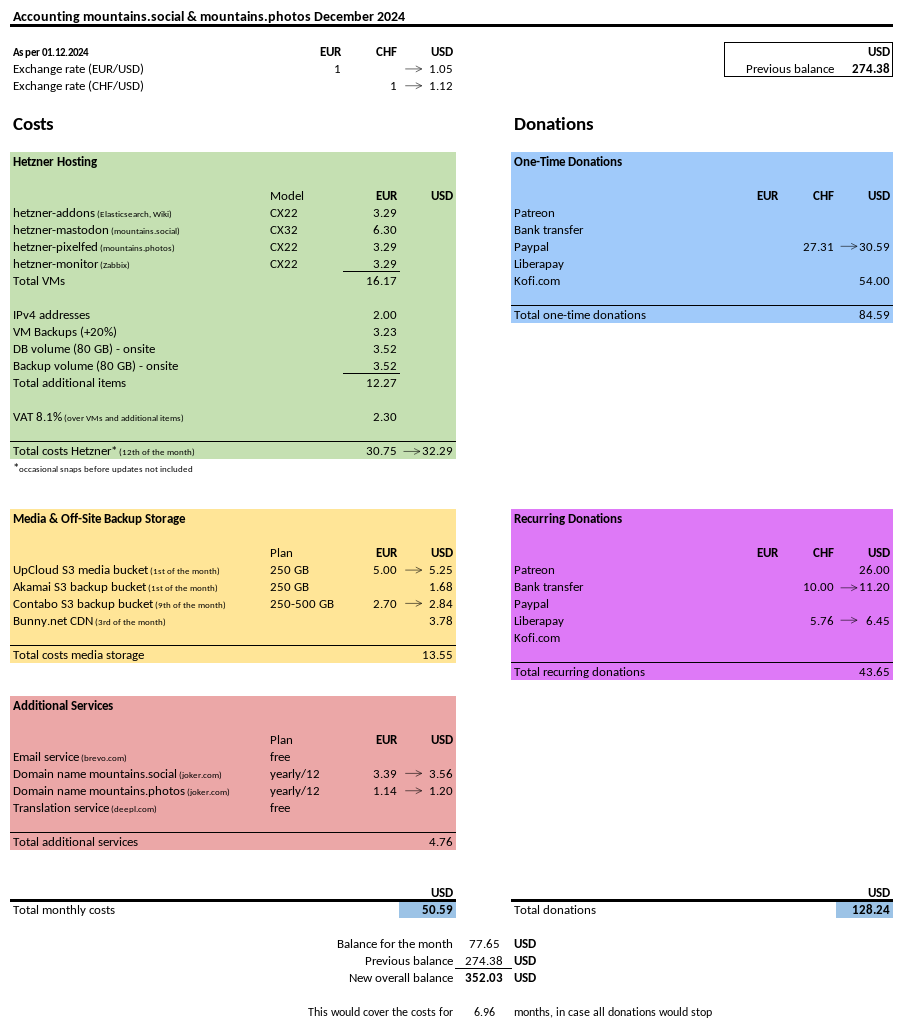

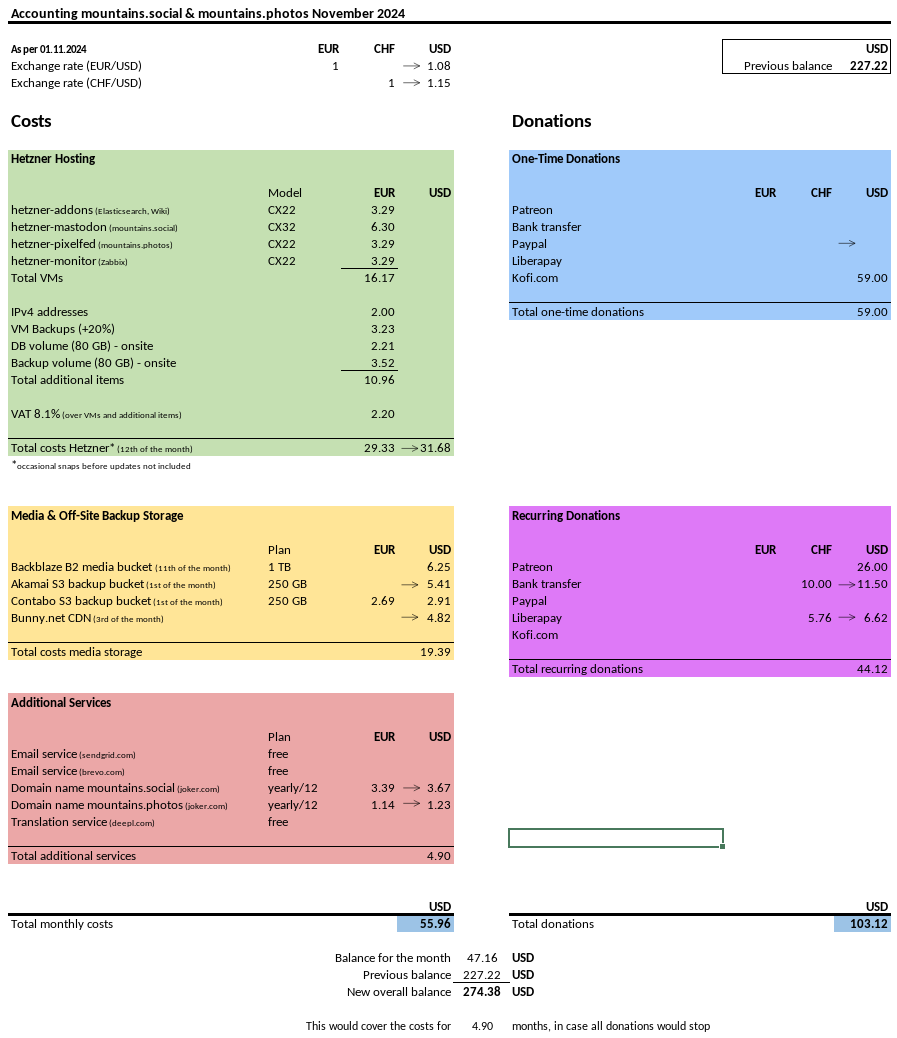

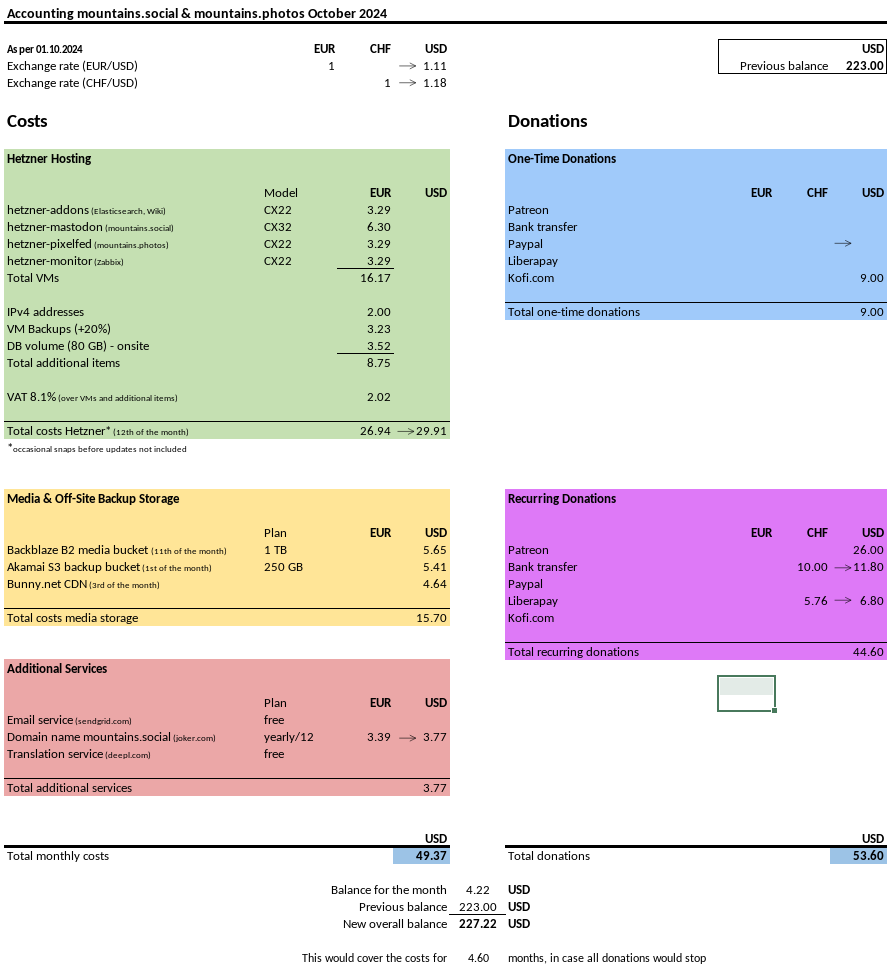

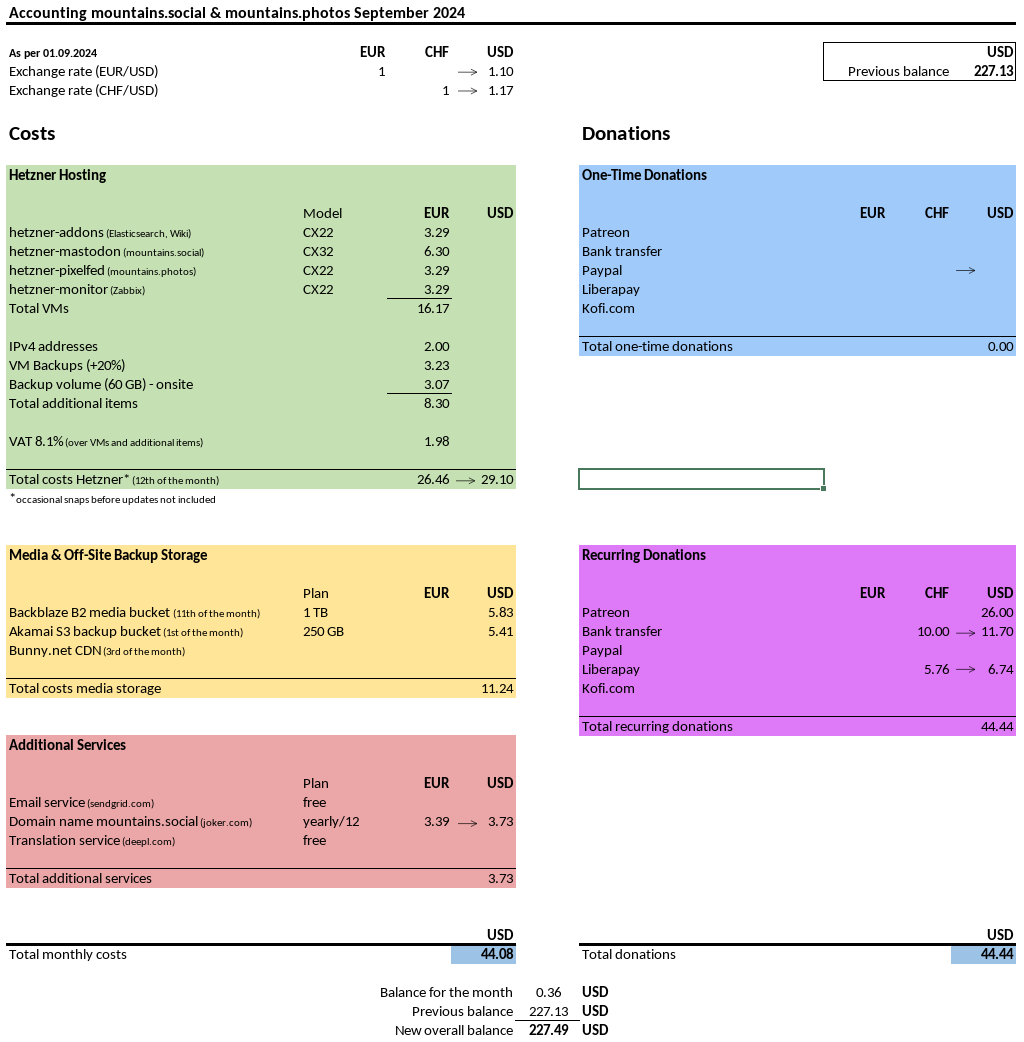

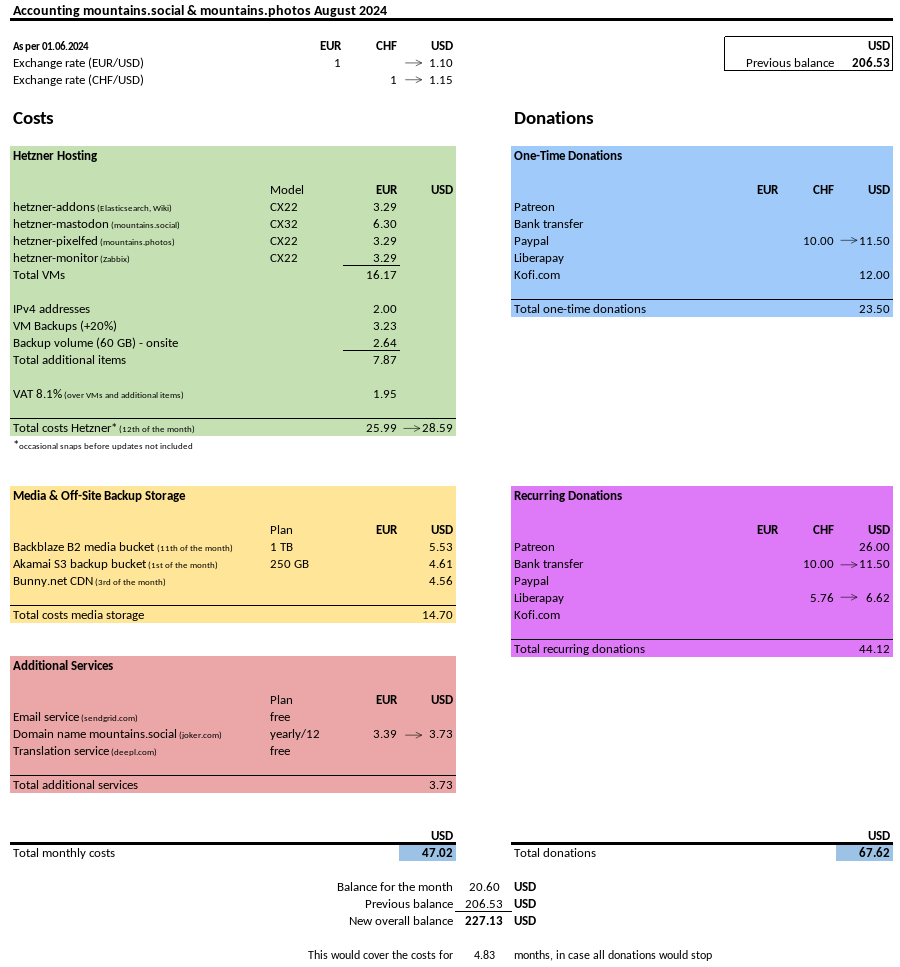

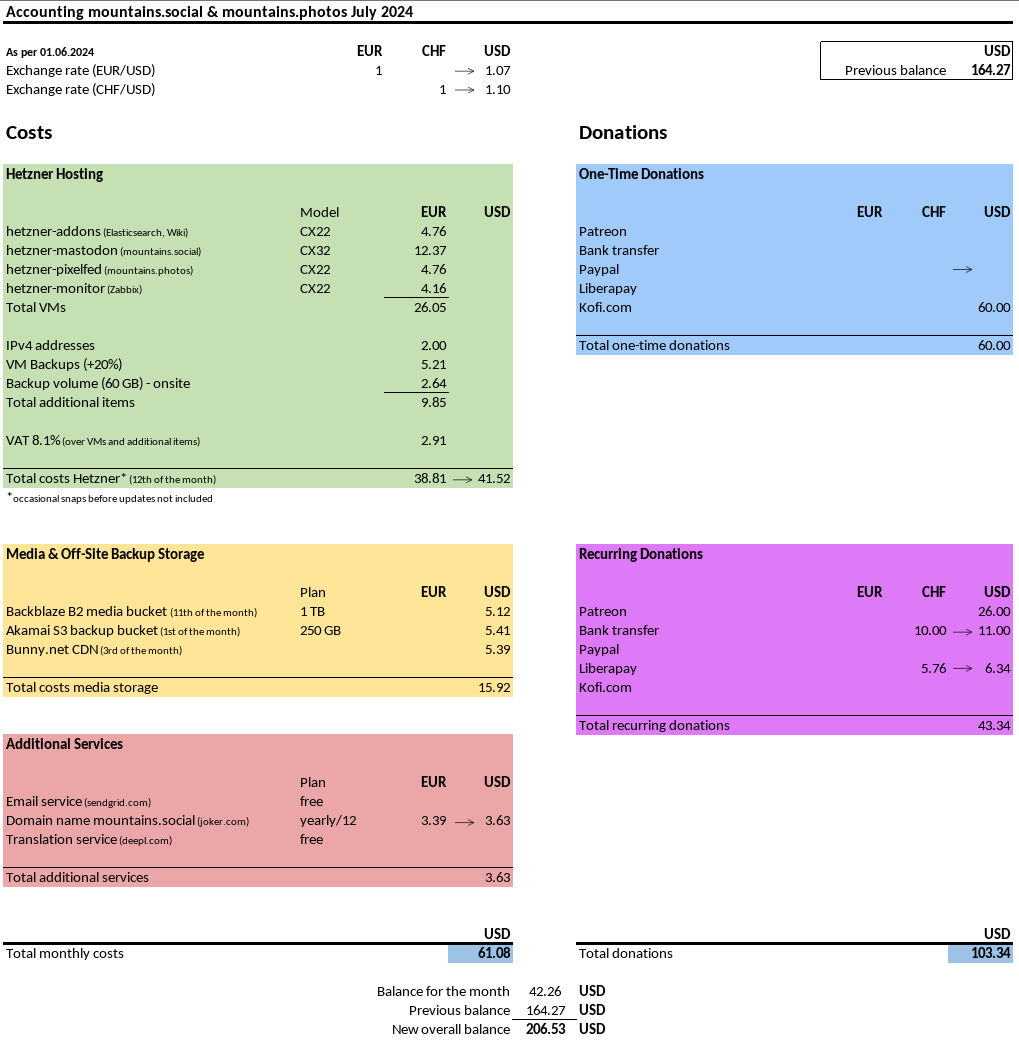

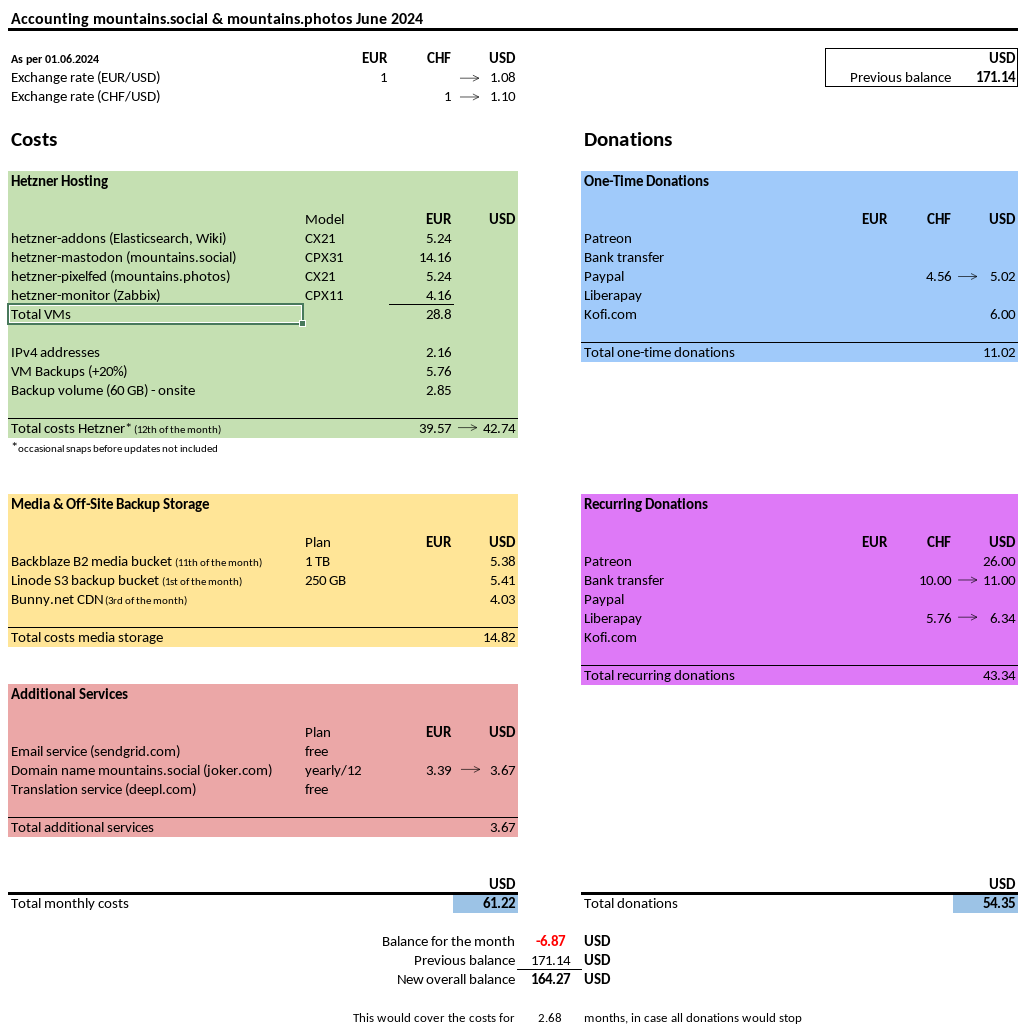

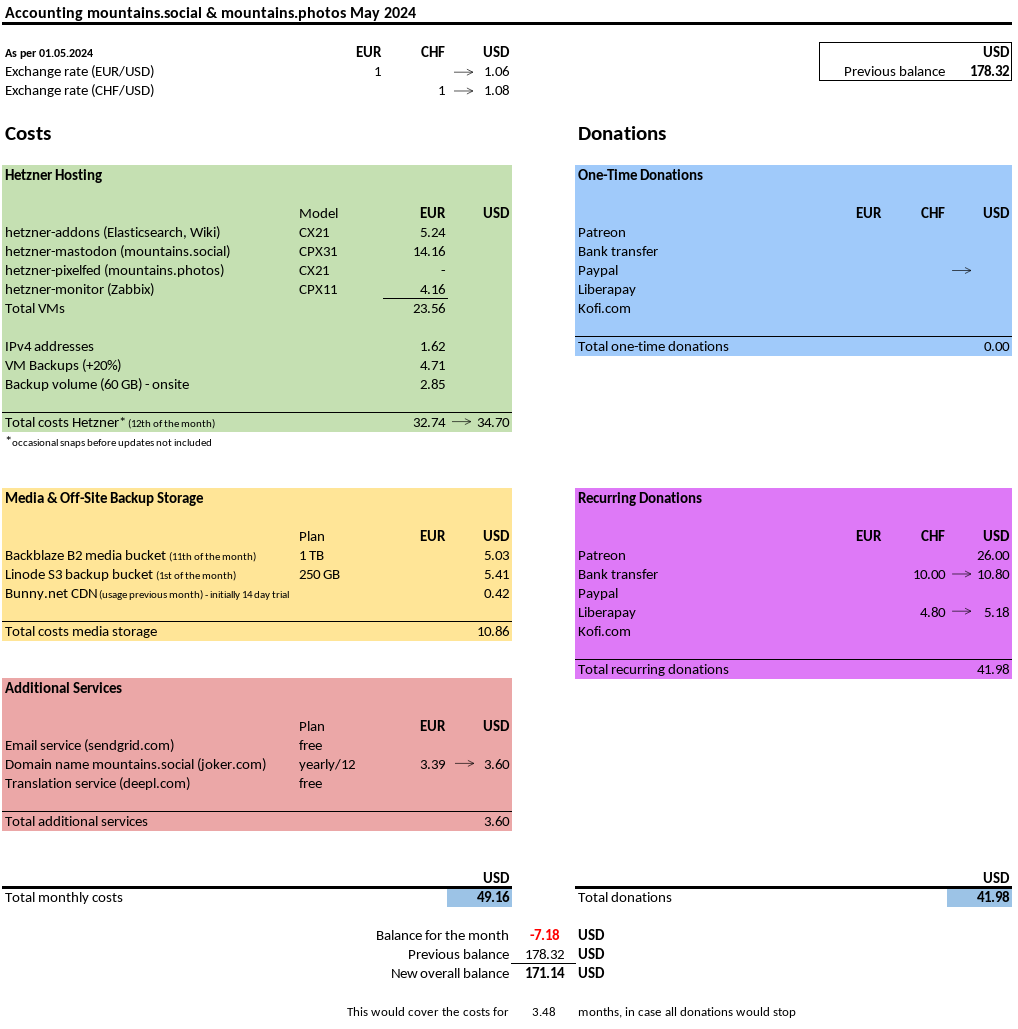

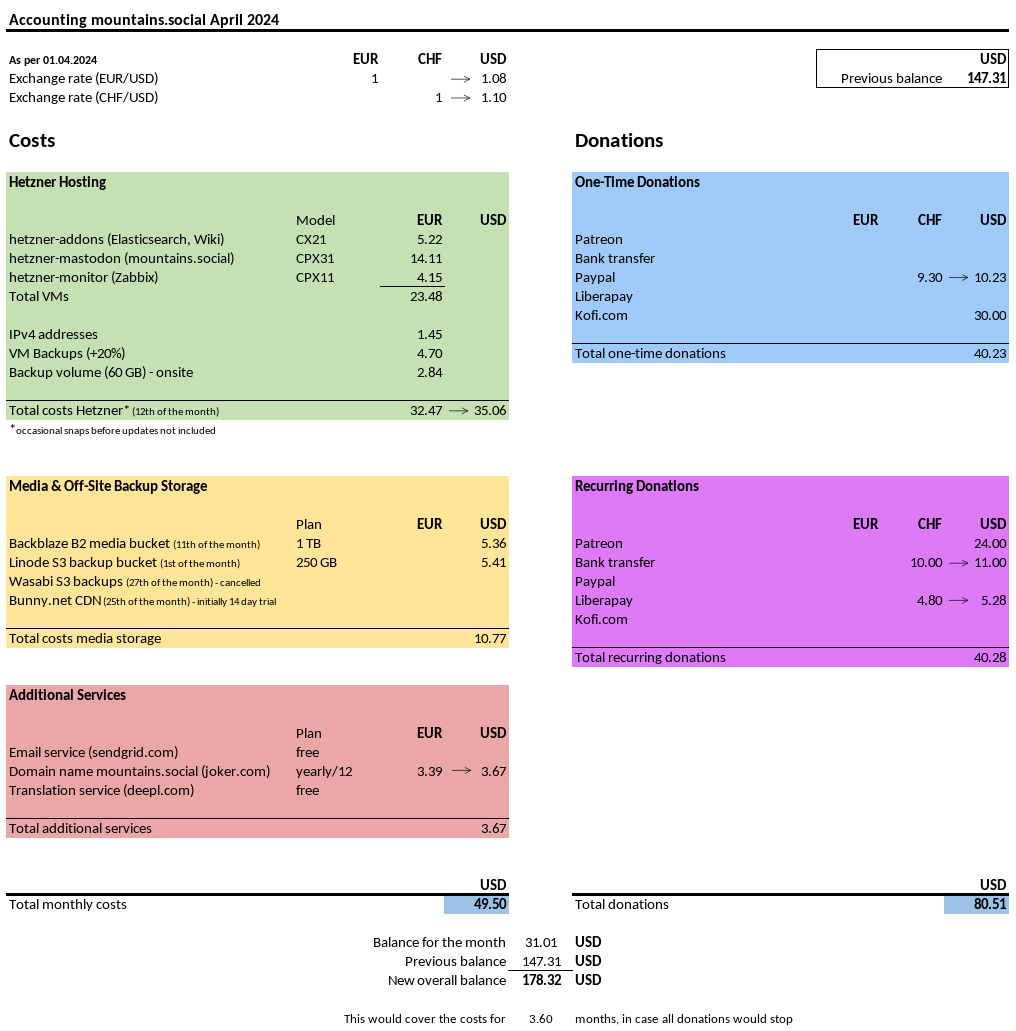

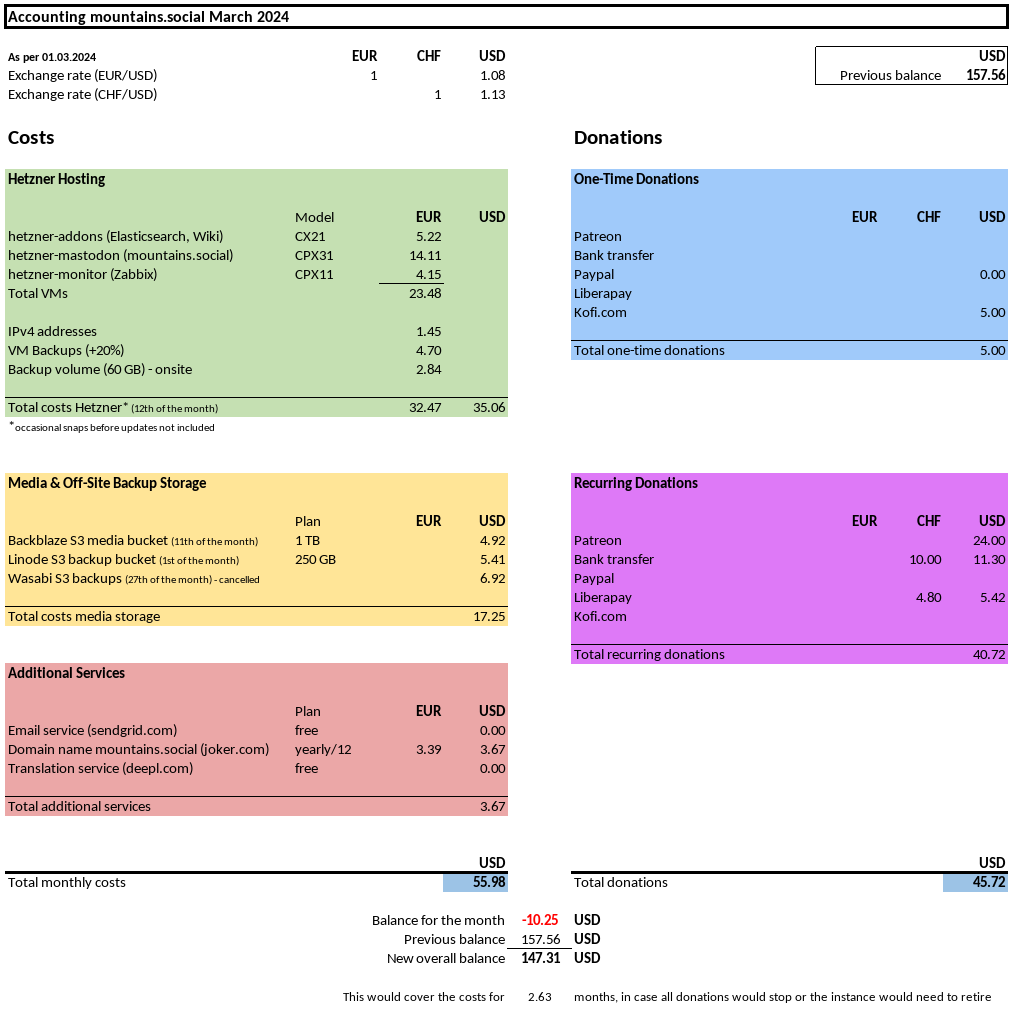

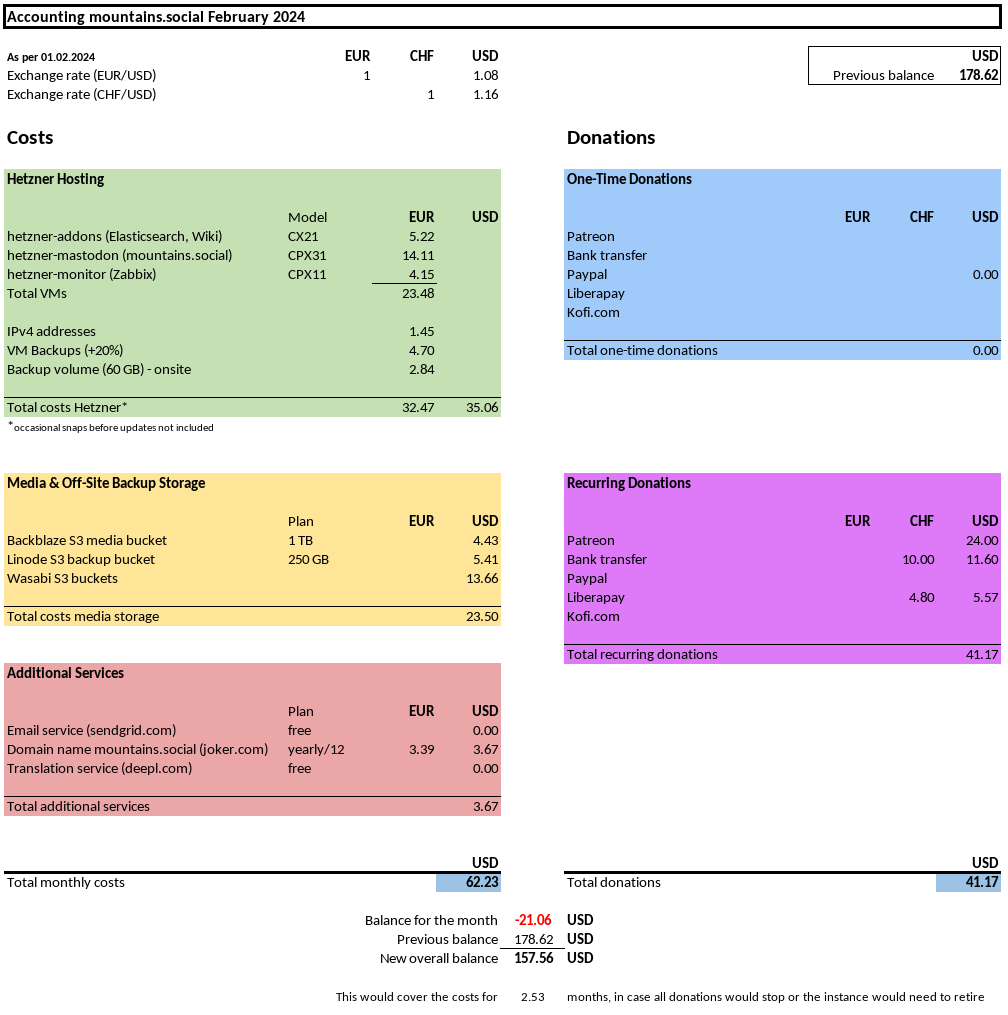

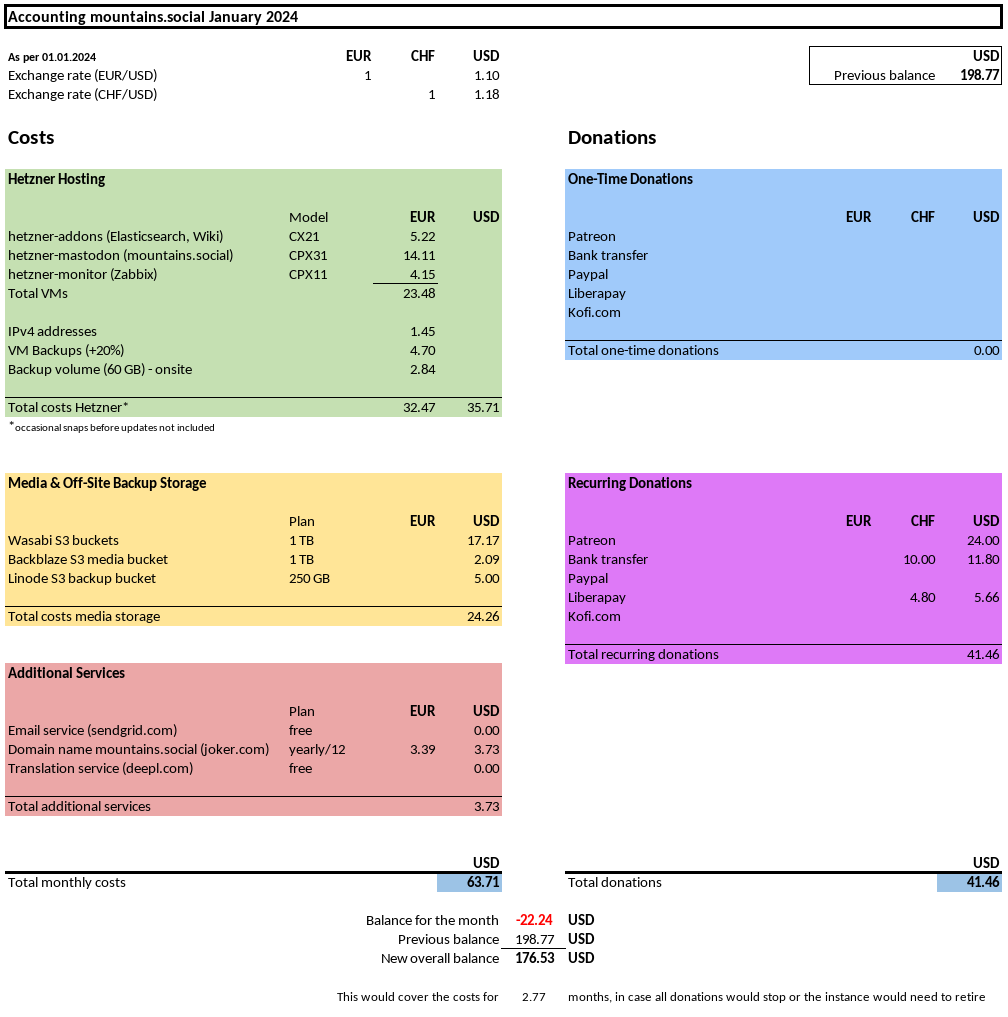

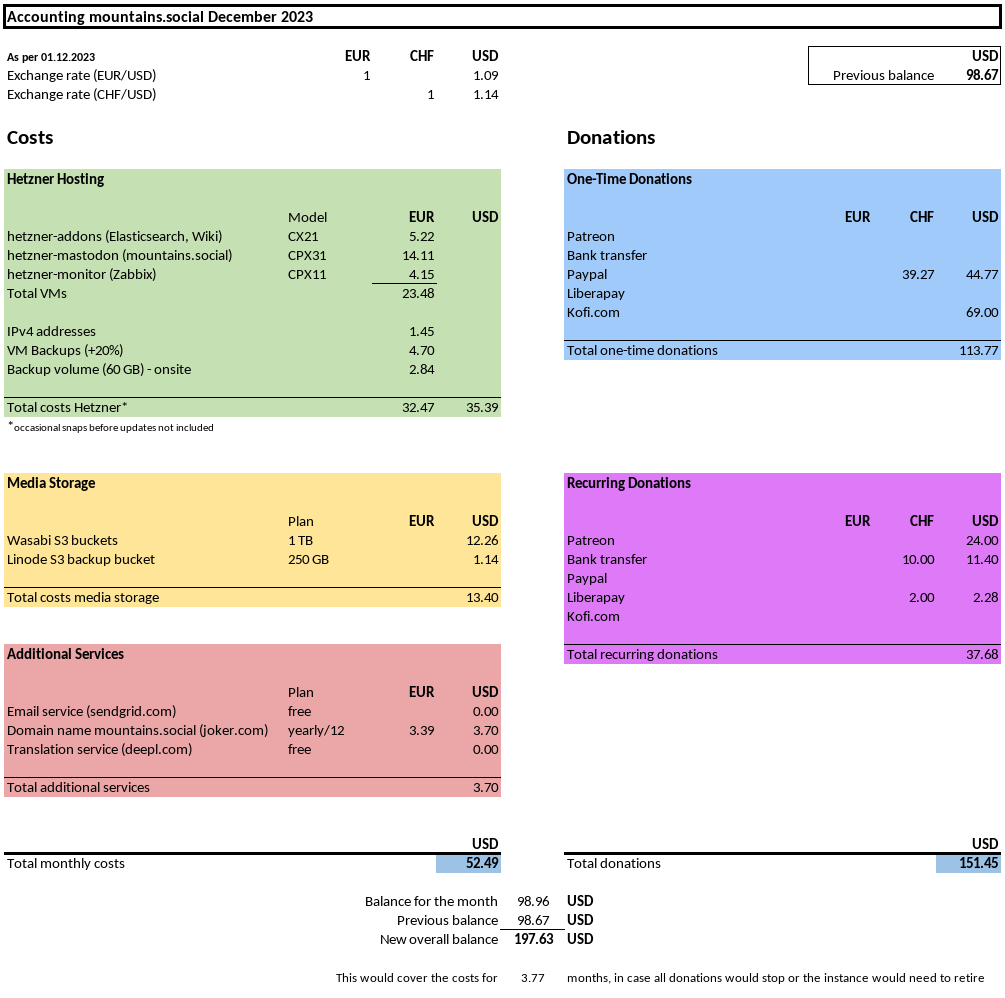

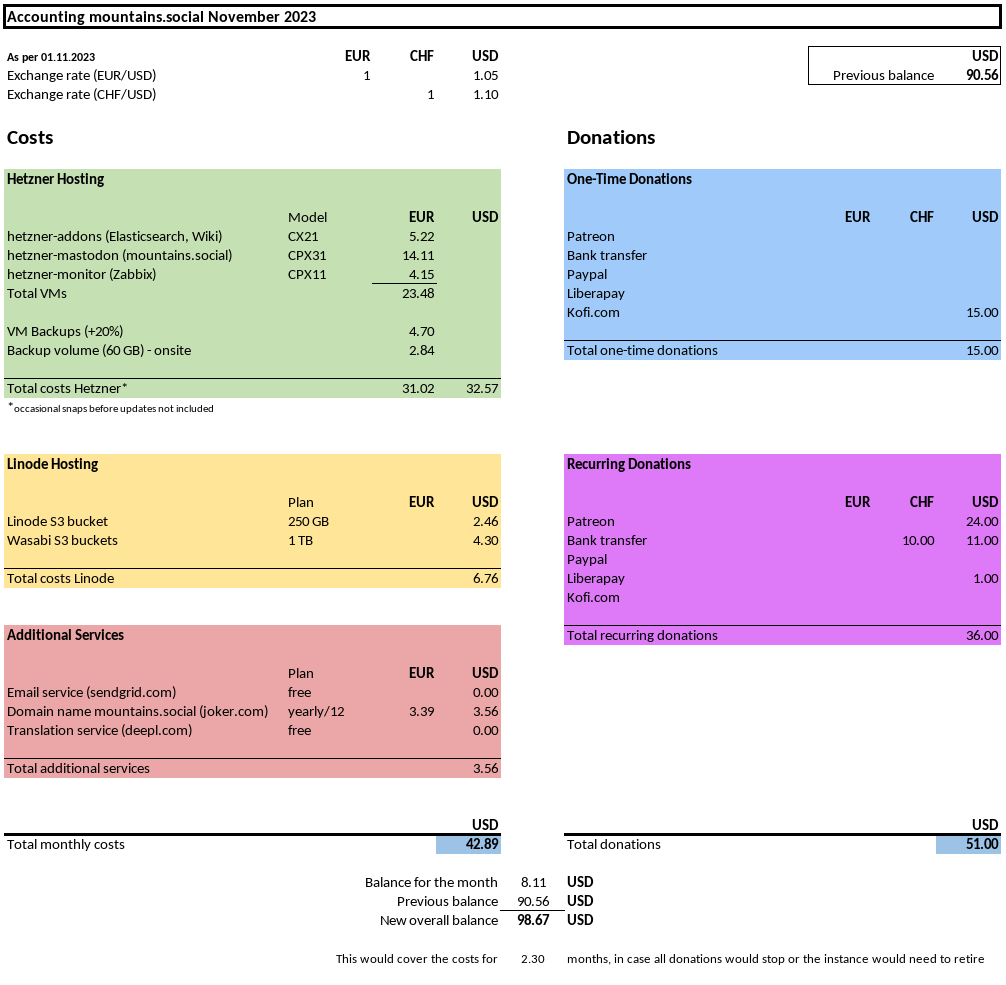

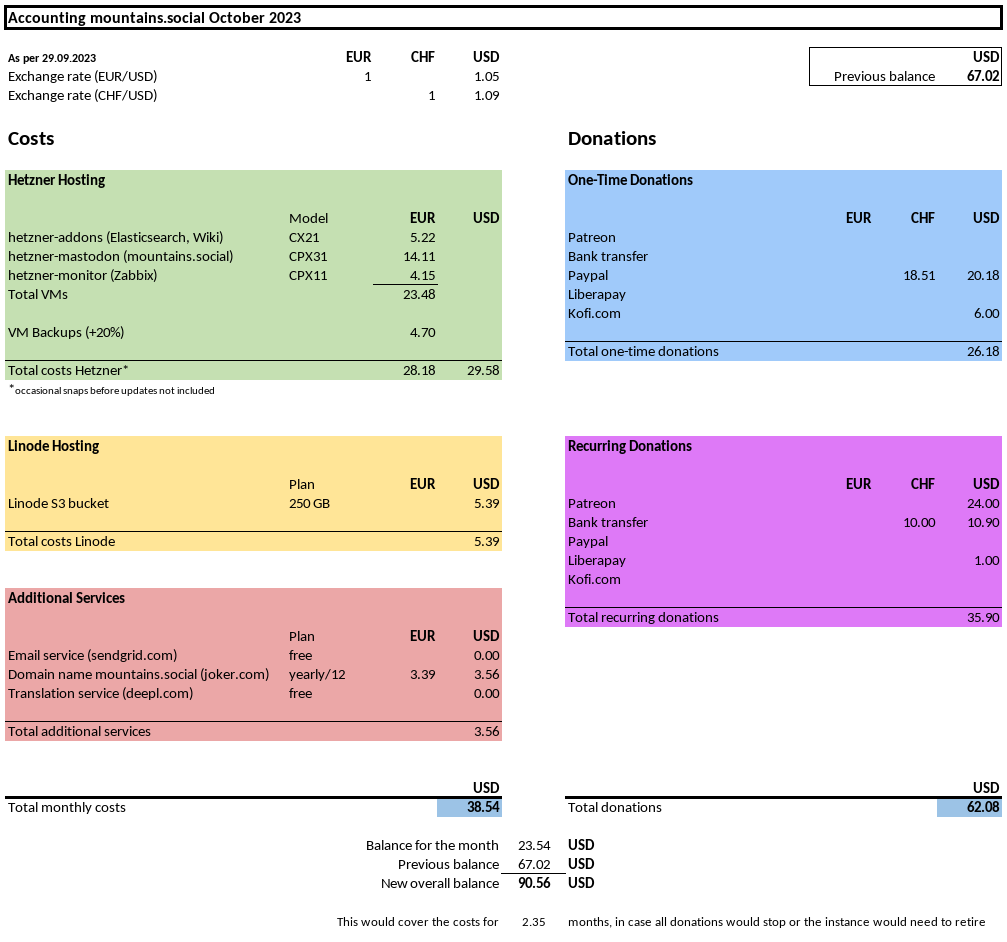

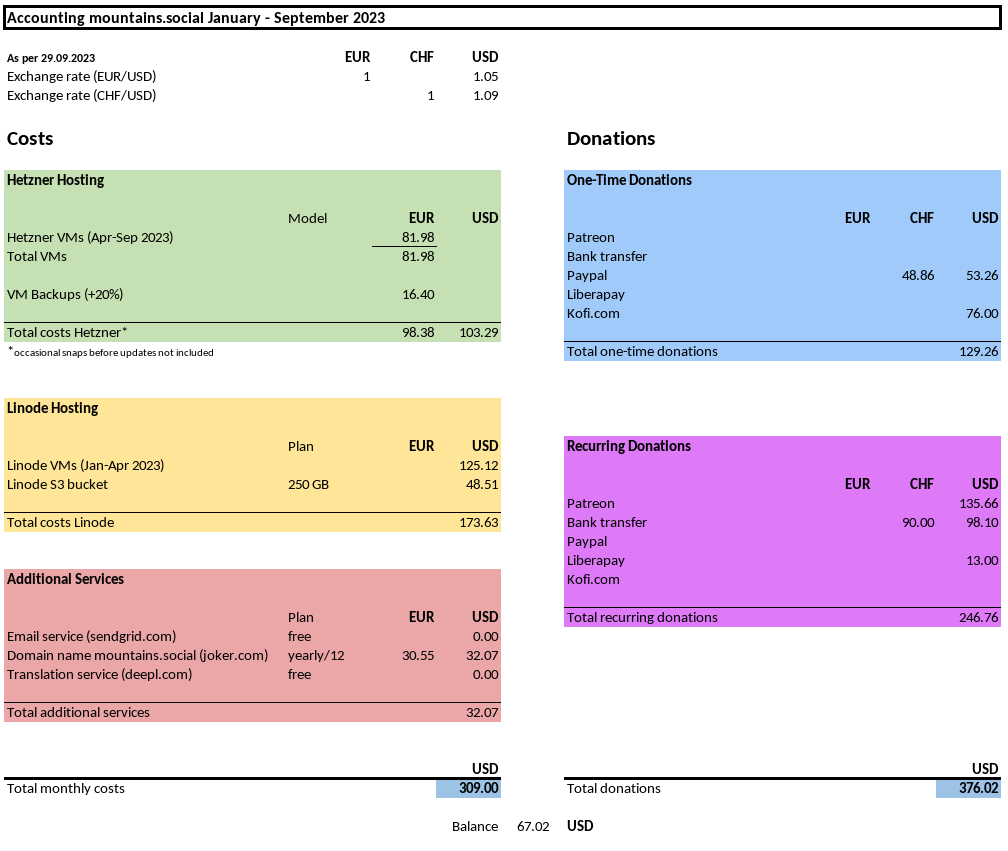

Accounting

Running a Fediverse instance comes with costs for the server, storage (media and backups) and other things such as the domain. Currently mountains.social is still on the free plan for DeepL translation and Brevo (for mail). Although nobody is required to contribute to these costs, various members are chipping in via recurring or one-time donations. To give an overview of the costs and donations, I update these numbers on a monthly basis.

Mountains.social was opened to the community in December 2022. In September 2023 I started tracking the costs and donations more closely.

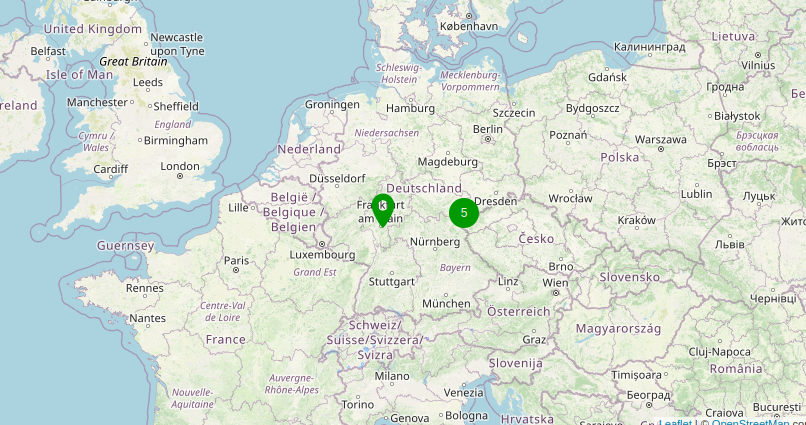

Peek in the technical stuff

The heart of the infrastructure is located at the Hetzner datacenter in Falkenstein, Germany. This is where the servers (running as virtual machines) that make up mountains.social and mountains.photos are located. The media that is uploaded by members or comes in via federation, however, is stored at the UpCloud datacenter in Frankfurt, Germany. This is also where the database and filesystem backups are stored. The media buckets at UpCloud are in turn cloned to the Hetzner datacenter. This way all required files are stored such that, in case of a datacenter outage or company disaster, we can recover most things.
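The two-provider layout described above can be checked with a small sketch. It is only an illustration: the labels in the dictionary are made up for this example, and the locations are taken from the text on this page rather than from any real configuration.

```python
# Where each kind of data lives, as described on this page.
# The dictionary keys are illustrative labels, not real configuration names.
COPIES = {
    "media": {"UpCloud", "Hetzner"},              # bucket at UpCloud, clone at Hetzner
    "database backup": {"Hetzner", "UpCloud"},    # local dump at Hetzner, copy at UpCloud
    "filesystem backup": {"Hetzner", "UpCloud"},  # live files at Hetzner, backup at UpCloud
}

def lost_if_down(copies, provider):
    """Return the data sets with no surviving copy if `provider` fails."""
    return sorted(name for name, places in copies.items() if not (places - {provider}))

for provider in ("Hetzner", "UpCloud"):
    print(provider, "outage loses:", lost_if_down(COPIES, provider) or "nothing")
```

The sketch only covers data. The running servers themselves would still have to be rebuilt elsewhere after a Hetzner outage.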

The philosophy for the technical setup is "keep it simple". The instance must run robustly, but too much unnecessary technical complexity and too many tweaks also increase the risk of failures. As long as the instance can run on a simple setup, this will be the approach.

Server farm

Mountains.social is a modest-sized Mastodon instance. This means the infrastructure can be kept simple. Nevertheless, there are 4 servers, each with its own role.

hetzner-mastodon

This server runs the main (PostgreSQL) database and the Mastodon server software. It is the core of the server farm. The "addons" and "monitor" servers could die without much impact on the instance (see hetzner-addons for the functionalities that would not be available).

| Hostname | hetzner-mastodon |
|---|---|
| Model | CX32 |
| CPU | 4 vCPU @ 2.294 GHz |
| Memory | 8 GB |
| Disk | 80 GB (local) + 80 GB (database) + 80 GB (local backups) |
| IPv4 | 142.132.227.141 |
| IPv6 | 2a01:4f8:c17:14db::1 |
| Functionalities | Mastodon |

hetzner-pixelfed

Like the Mastodon server, this Pixelfed server uses a PostgreSQL database. This system does not rely on the server with the addons. All required software is installed locally on the system.

| Hostname | hetzner-pixelfed |
|---|---|
| Model | CX22 |
| CPU | 2 vCPU @ 2.294 GHz |
| Memory | 4 GB |
| Disk | 40 GB (local) |
| IPv4 | 49.13.114.95 |
| IPv6 | 2a01:4f8:c012:1a4::1 |
| Functionalities | Pixelfed |

hetzner-addons

As the name says, this server hosts addons, as in "optional". These goodies are located on a different server so that they do not affect the main instance in case of issues. The main addon is Elasticsearch, which provides the full-text search. It is known for being memory-hungry and should not be able to compete with Mastodon for memory. The wiki you are reading now (built on Grav) also runs on this server. Finally, it provides the remote operating access to all 4 servers.

| Hostname | hetzner-addons |
|---|---|
| Model | CX22 |
| CPU | 2 vCPU @ 2.095 GHz |
| Memory | 4 GB |
| Disk | 40 GB (local) |
| IPv4 | 49.12.4.140 |
| IPv6 | 2a01:4f8:c17:805e::/64 |
| Functionalities | Elasticsearch |
| | Wiki (via Grav in a Docker container) |

hetzner-monitor

This server monitors all components on the mountains.social servers: metrics on the database, Sidekiq, webserver, etc., as well as their availability. The media and backup buckets at Backblaze and Linode are also monitored from this server. The monitoring is done via Zabbix, which has lots of integrations for servers and software. Notifications in case of alerts are sent via a self-hosted ntfy instance. This system is not directly reachable from the internet.

| Hostname | hetzner-monitor |
|---|---|
| Model | CX22 |
| CPU | 2 vCPU @ 2.294 GHz |
| Memory | 4 GB |
| Disk | 40 GB (local) |
| IPv4 | - |
| IPv6 | - |
| Functionalities | Zabbix |

Remote availability monitoring is also in place, which is not documented in further detail here.
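To give an idea of what an alert sent to ntfy looks like, here is a minimal sketch. ntfy accepts a plain HTTP POST to a topic URL, with optional `Title` and `Priority` headers. The host name, topic and message below are hypothetical, and the actual Zabbix integration on this instance may be set up differently. The sketch only builds the request and does not send anything.

```python
from urllib.request import Request

def build_ntfy_request(base_url, topic, message, title, priority="default"):
    """Build (but do not send) an HTTP POST that publishes one ntfy message."""
    return Request(
        url=f"{base_url.rstrip('/')}/{topic}",
        data=message.encode("utf-8"),
        headers={"Title": title, "Priority": priority},
        method="POST",
    )

# Hypothetical host and topic, for illustration only.
req = build_ntfy_request(
    "https://ntfy.example.org", "alerts",
    "Sidekiq queue latency is high", title="hetzner-mastodon", priority="high",
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would actually deliver the notification.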

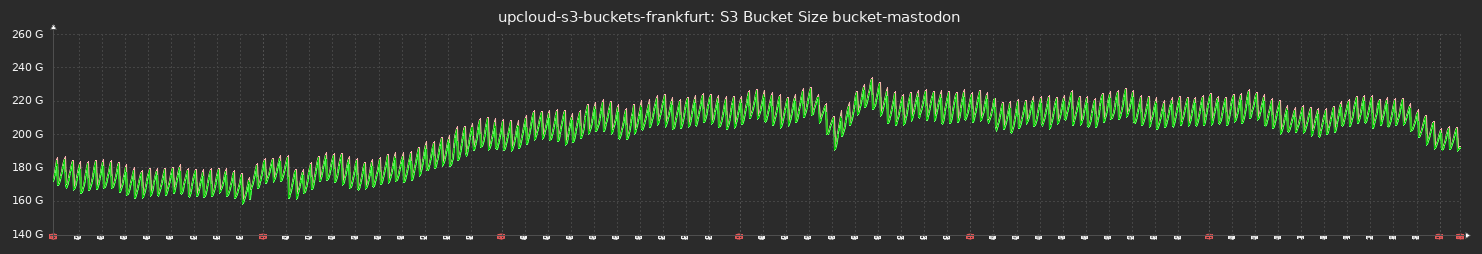

Media storage

All information and text from a post is saved in the Mastodon database. The attached media, however, is saved outside of this database. It could be stored locally on the Mastodon server, but this would drive up costs significantly. Therefore the media for mountains.social is stored in S3 Object Storage buckets at UpCloud.

To give an impression of the size of the bucket, the graph below shows the history of the last 6 months (June - November 2024). The saw-tooth pattern comes from the daily housekeeping jobs, which remove older remote media (remote here means media that came in via federation).
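The selection rule behind such a housekeeping job can be sketched as follows. This is an illustration only: the record layout, the object keys and the 7-day retention period are assumptions, not the values used on this instance.

```python
from datetime import datetime, timedelta

def expired_remote_media(media, now, retention_days):
    """Return the keys of remote media older than the retention window."""
    cutoff = now - timedelta(days=retention_days)
    return [
        item["key"]
        for item in media
        if item["remote"] and item["fetched_at"] < cutoff
    ]

now = datetime(2024, 11, 30)
media = [
    # Local uploads are never removed by this rule, however old they are.
    {"key": "local/a.jpg", "remote": False, "fetched_at": datetime(2024, 6, 1)},
    {"key": "cache/b.jpg", "remote": True, "fetched_at": datetime(2024, 11, 1)},
    {"key": "cache/c.jpg", "remote": True, "fetched_at": datetime(2024, 11, 29)},
]
print(expired_remote_media(media, now, retention_days=7))  # ['cache/b.jpg']
```

Each day new remote media arrives and the oldest batch is removed, which produces the saw-tooth shape.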

Media delivery

To improve the performance of media delivery to users, a Content Delivery Network (CDN) has been configured. The CDN used is Bunny.net. To balance costs and performance, the "Volume Network" is used. This network has nodes in the 10 locations listed below. The "Standard Network" of Bunny.net has nodes in 100+ locations, but would cost 3 to 4 times as much.

When you post media, it is not uploaded to one of the CDN nodes, but goes via the Mastodon server in Germany instead. Only the subsequent delivery of the media to users is handled by the CDN.
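The split between upload and delivery can be pictured with a toy model. It assumes the CDN behaves as a pull-through cache, meaning a node fetches a file from the origin the first time it is requested and serves it from its own cache afterwards. The class and names are purely illustrative and say nothing about how Bunny.net is implemented.

```python
class PullThroughCache:
    """Toy model of one CDN node that fetches from the origin on a cache miss."""

    def __init__(self, origin):
        self.origin = origin      # dict standing in for the media bucket
        self.cache = {}
        self.origin_fetches = 0

    def get(self, key):
        if key not in self.cache:
            self.cache[key] = self.origin[key]
            self.origin_fetches += 1
        return self.cache[key]

origin = {}
origin["photo.jpg"] = b"summit"   # upload goes to the origin, not to the CDN
node = PullThroughCache(origin)
node.get("photo.jpg")             # first view: the node pulls from the origin
node.get("photo.jpg")             # later views: served from the node's cache
print(node.origin_fetches)        # 1
```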

| Europe | North America |
|---|---|
| 🇩🇪 Frankfurt | 🇺🇸 Los Angeles |
| 🇫🇷 Paris | 🇺🇸 Chicago |
| | 🇺🇸 Dallas |
| | 🇺🇸 Miami |

| Asia | South America |
|---|---|
| 🇸🇬 Singapore | 🇧🇷 São Paulo |
| 🇯🇵 Tokyo | |
| 🇭🇰 Hong Kong | |

Backups

Backups are vital when running a service that a lot of people rely on. Sure, mountains.social is perhaps not the most important service on the internet, but it would nevertheless be a shame if people lost posts, memories and connections to other people. Therefore backups at various levels are scheduled, run and monitored.

Server Backups

The servers run as virtual machines (VMs), which makes it easy to take a backup at the server level. Every server is backed up daily. The backup includes all static files as well as the database. As the database is constantly changing (and with it its files on the operating system), such a backup can't be used to recover the complete system. For static files such as configuration files and binaries, however, it is perfect. Just before maintenance activities (depending on the type of maintenance), a separate manual backup is made. If something goes wrong, this keeps data loss to a minimum.

Database Backups

As mentioned above, the server backups can't be used to recover the database in case of failure, as its files are inconsistent in the server backup. Therefore a daily database backup is scheduled, which is dumped to a local filesystem. Afterwards this database backup, together with the ~live directory (where all the Mastodon software and configuration is located) and further relevant configuration files, is sent to the S3 UpCloud backup bucket. The tool used here is Duplicati, which is installed locally on the Mastodon server.
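The dump step could look roughly like the sketch below. It assumes PostgreSQL's standard `pg_dump` tool is used, which this page does not state. The database name and target directory are illustrative guesses as well. The function only builds the command, so it can be inspected without touching a database.

```python
from datetime import date
from pathlib import PurePosixPath

def pg_dump_command(database, backup_dir, day):
    """Build a pg_dump command that writes one dated dump file."""
    target = PurePosixPath(backup_dir) / f"{database}-{day.isoformat()}.dump"
    # --format=custom produces a compressed archive that pg_restore can read.
    return ["pg_dump", "--format=custom", f"--file={target}", database]

# Database name and directory are assumptions for illustration.
cmd = pg_dump_command("mastodon_production", "/backup/db", date(2024, 11, 30))
print(" ".join(cmd))
```

A scheduled job could run the command with `subprocess.run(cmd, check=True)`. Unlike a copy of the live database files, a dump taken this way is a consistent snapshot, which is why it can be restored where the server backup cannot.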

Media backups

Whereas the database backup keeps multiple generations, the backup of the media bucket keeps just one. The backup is done via a daily rclone sync. New files are copied to the backup bucket, and files deleted from the media bucket (housekeeping) are then also deleted from the backup bucket. The main reasons for keeping just one generation are storage costs and traffic.
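The mirror behaviour of such a sync can be sketched in a few lines. This is not how rclone is implemented. It is a simplified model that compares two listings by checksum, with made-up file names, to show why the backup bucket always ends up matching the media bucket.

```python
def sync_plan(source, backup):
    """Compare two {key: checksum} listings and return (to_copy, to_delete)."""
    to_copy = sorted(k for k, v in source.items() if backup.get(k) != v)
    to_delete = sorted(k for k in backup if k not in source)
    return to_copy, to_delete

media_bucket = {"a.jpg": "111", "c.jpg": "333"}    # b.jpg was removed by housekeeping
backup_bucket = {"a.jpg": "111", "b.jpg": "222"}   # state after yesterday's sync
print(sync_plan(media_bucket, backup_bucket))      # (['c.jpg'], ['b.jpg'])
```

Because deletions are mirrored, a file removed from the media bucket is gone from the backup after the next sync. That is the trade-off of keeping a single generation.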